With hard drives, SSDs, RAM, VRAM, fancy CPU tech, and more all competing for your attention, we felt it was about time for a bit of a deep dive into data transfer rates.

That way, even if you’re working from an example PC build in our main build chart, you’ll know exactly what kind of speed and overall bandwidth you’re getting for your money!

Where to start? The CPU of course! (CPU, CPU Cache)

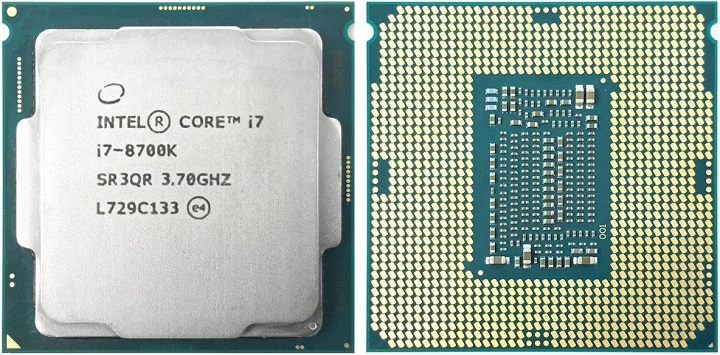

So this all starts with the CPU. Every other piece of hardware is essentially trying to keep up with how fast your CPU can handle data. To let the CPU get through such huge amounts of data, every modern CPU comes with some onboard cache, which is designed to let the CPU rapidly use the data stored there. Now, just like the modern DDR4 RAM that you purchase, speed costs money, especially when we’re looking at cache speeds. For this reason, a current-generation CPU will come with 3 tiers of cache (usually labelled L1, L2, and L3), and you’ll be lucky if a CPU comes with more than a few MB of L3 cache.

Throughput on cache (how fast the data can move through the cache) is tied closely to instructions-per-clock-cycle as well as physical clock speed, but the theoretical bandwidth for L3 cache is a monstrous 175 GB per second (or 175,000 MB/s, so that this makes sense alongside the other numbers below). That’s why something like a shiny new i7-8700K only comes with 12 MB of L3 cache. Anything more and, frankly, the cost of the CPU would skyrocket.

Why Infinity Fabric is Kinda Awesome

Although Intel labelled AMD’s Infinity Fabric as ‘glue,’ a lot of credit has to be given to AMD’s boffins, who created this technology to allow the cores of their CPUs (like the new Ryzen 7 2700X) to effectively “talk” to each other quickly. This is a system that scales with the requirements of the CPU: its performance starts at a data transfer rate of 30 GB/s and hits the heady heights of 512 GB/s (512,000 MB/s) at its upper bound. Essentially, this is how AMD manages to share cache across its clusters of CPU cores so efficiently, since the fabric can match the insane speeds of each cluster’s L3 cache… yet CPU design is a whole other topic for another day!


System Memory (RAM)

Because of the high cost of CPU cache, a good “go-between” from standard storage types to the CPU and its cache came about in the form of DRAM. There have been a number of improvements to the speeds of RAM over the years, thanks in no small part to the improvement of CPU speeds. As such, if you take some nice modern DDR4 RAM like this G.SKILL TridentZ kit, you’re generally looking at a peak data transfer rate of around 25,600 MB/s. So already you are looking at something in the computer that is quite a bit slower than the cache, although it’s still fast enough to hold the programs the CPU is running, and their data, while the system is powered. Clock speed plays a part here too, but it is the memory’s own clock that matters: DDR (Double Data Rate) transfers data on both the rising and falling edges of the memory clock signal, giving two transfers per clock cycle.
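To see where a figure like 25,600 MB/s comes from, here is a rough sketch of the usual peak-bandwidth arithmetic. It assumes the quoted number corresponds to DDR4-3200 (3,200 million transfers per second) on a single 64-bit memory channel; the article does not state the speed grade, and the function name is purely illustrative.

```python
def ddr_peak_bandwidth_mb_s(mega_transfers_per_sec: float,
                            bus_width_bits: int = 64,
                            channels: int = 1) -> float:
    """Theoretical peak bandwidth in MB/s.

    mega_transfers_per_sec already includes the "double data rate" effect:
    DDR4-3200 runs a 1,600 MHz memory clock and transfers on both edges.
    """
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_sec * bytes_per_transfer * channels


# Assumption: DDR4-3200 on a standard 64-bit channel.
single = ddr_peak_bandwidth_mb_s(3200)
dual = ddr_peak_bandwidth_mb_s(3200, channels=2)

print(f"DDR4-3200, one channel:  {single:,.0f} MB/s")
print(f"DDR4-3200, two channels: {dual:,.0f} MB/s")
```

These are theoretical peaks; real-world throughput will land somewhat below them.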

What about GDDR Memory? (VRAM)

The same general rule of thumb applies to the VRAM used in graphics cards. There are a number of grades of it available, and one of the fastest that is readily available is GDDR5X, which you’ll find in the likes of NVIDIA’s GTX 1080 and upwards. This GDDR5X VRAM has a data transfer rate of 45,640 MB/s. Compare that with the fastest DDR4 system memory (25,600 MB/s) and it becomes clear why graphics cards, with their super-fast memory, have become popular for compute tasks like rendering, encoding, machine learning, and general mathematical research, often with multiple dedicated GPUs in one system.

The dedicated GPU has become popular with professionals because its GDDR memory is well suited to stream processing. For certain mathematical problems that can be broken down into many independent data items, using multiple GPU cores has proven to be faster and more cost effective than using standard CPU configurations. NVIDIA’s platform, CUDA (Compute Unified Device Architecture), allows the GPU to use its GDDR RAM as a unified memory structure capable of scattered reads (that is, different parts of the data can be accessed in a random or at least varied order). Several GPUs can also work together across multiple PCIe expansion slots, whereas the majority of standard motherboards pair their numerous PCIe lanes with just one CPU socket, so adding GPUs is usually the easier way to scale up compute performance.

For more information on VRAM, check out our article explaining the different types that are available today.


Now for the Motherboard’s PCIe Lanes (PCIe x1-x16)

There was once a nightmare time in the history of building PCs, where components used no standardized format to be installed onto a motherboard; there was no truly consistent way back then to know for certain if any given part would even work when you installed it… such good times. Thankfully, PCIe came along and made our lives as builders a whole lot simpler. You know how everyone says these days building a computer is like a very nerdy version of Lego? Well, a significant part of that analogy has this standard to thank.

Each PCIe “lane” has two pairs of wires: one pair to send data and one pair to receive data. When you see a PCIe designation like “x4”, that means the device in question needs 4 lanes for its maximum data bandwidth. Now, we are currently using PCIe 3.0 and (as can often happen with standards) issues with 4.0 back in 2013 mean manufacturers are aiming to jump straight to the 5.0 standard in 2019. However, for now, the 3.0 standard gives the following data transfer rates, based on the number of lanes used:

| x1 | x2 | x4 | x8 | x16 |
| --- | --- | --- | --- | --- |
| 984.6 MB/s | 1,970 MB/s | 3,940 MB/s | 7,900 MB/s | 15,800 MB/s |

Speed is one of the big improvements coming with the 5.0 standard, as it should allow for four times the bandwidth of 3.0!
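The PCIe 3.0 figures can be reproduced from the per-lane signalling rate. This is a rough sketch, assuming PCIe 3.0 signals at 8 GT/s per lane and PCIe 5.0 at 32 GT/s, both with 128b/130b encoding (values taken from the PCIe specifications rather than from this article). The function and constant names are illustrative only.

```python
# Assumed raw signalling rate per lane, in gigatransfers per second
# (one bit per transfer, per direction).
GT_PER_SEC = {"3.0": 8.0, "5.0": 32.0}

# 128b/130b encoding: 130 bits on the wire carry 128 bits of data.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_mb_s(generation: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in MB/s for a given link width."""
    data_bits_per_sec = GT_PER_SEC[generation] * 1e9 * ENCODING_EFFICIENCY
    return data_bits_per_sec / 8 / 1e6 * lanes


for lanes in (1, 2, 4, 8, 16):
    gen3 = pcie_bandwidth_mb_s("3.0", lanes)
    gen5 = pcie_bandwidth_mb_s("5.0", lanes)
    print(f"x{lanes:<2}  PCIe 3.0: {gen3:>9,.1f} MB/s   PCIe 5.0: {gen5:>9,.1f} MB/s")
```

The exact results come out a touch below the rounded values quoted above for the wider links (x16 works out to roughly 15,750 MB/s), and the 5.0 column is four times the 3.0 column at every width.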

This is also why it’s a good idea to check how many PCIe lanes your CPU and motherboard (with the onboard chipset) can provide you.

For example, I have an i7-8700K with 2x Samsung 960 Pro drives (you can get the new 970 Pro for cheaper right now), as well as a GigaByte AORUS GTX 1080 Ti all running on an Asus ROG Strix Z370-F motherboard (see: so many manufacturers working nicely together, thanks to PCIe).

The 8700K by itself provides 16 lanes, which can be split in various combinations (1×16, 2×8, or 1×8+2×4). The Z370 chipset adds up to 24 lanes of its own, although everything hanging off the chipset shares a single x4-wide link (DMI 3.0) back to the CPU. Where things get interesting is that, for maximum output, the GTX 1080 Ti gobbles up all 16 CPU lanes, effectively meaning my two 960 Pro drives only really get their bandwidth thanks to the chipset! Now, if I installed another PCIe device into one of the board’s CPU-connected slots, it would most likely drop the 1080 Ti down to only using 8 lanes; but this only theoretically limits the data transfer rates of these devices, because you’re so rarely running everything at 100% at all times anyway.

What this Means for M.2 Drives (M.2 SSD)

If you aren’t aware, M.2 NVMe SSDs use PCIe lanes on the motherboard, via the NVMe interface, in order to reach the insane speeds their flash memory is capable of. Each of these M.2 drives uses up to 4 PCIe lanes to send and receive data to its onboard flash. So if you take a brand new drive like the Samsung 970 EVO 500GB, this is why they can advertise the drive as having “Read speeds up to 3,500 MB/s”. Their V-NAND flash is capable of that speed and, as you can see in the table above, the x4 bandwidth is enough to support this speed (and a little more).
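As a quick sanity check on that “and a little more”, the sketch below compares the drive’s advertised read speed with the x4 and x2 link figures from the PCIe 3.0 table. The helper name is hypothetical, and the numbers are the rounded ones quoted in this article.

```python
# Rounded PCIe 3.0 link bandwidths from the table above, in MB/s.
PCIE3_LINK_MB_S = {2: 1970, 4: 3940}

# Advertised sequential read speed of the Samsung 970 EVO, in MB/s.
DRIVE_READ_MB_S = 3500


def effective_speed_mb_s(device_mb_s: float, link_mb_s: float) -> float:
    """A device can never move data faster than the link it sits on."""
    return min(device_mb_s, link_mb_s)


for lanes, link in PCIE3_LINK_MB_S.items():
    speed = effective_speed_mb_s(DRIVE_READ_MB_S, link)
    headroom = link - DRIVE_READ_MB_S
    if headroom >= 0:
        note = f"{headroom} MB/s of headroom"
    else:
        note = "link is the bottleneck"
    print(f"x{lanes} link: up to {speed:,} MB/s ({note})")
```

On a full x4 link the drive is the limiting factor, while dropping it onto an x2 link would cap it well below its advertised speed.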

Don’t Forget About Good ol’ SATA! (HDD, SSD, etc.)

SATA has been the standard connection for traditional hard drives and other internal devices for many years, mainly because (like PCIe) the port was flexible with the types of devices which could be connected to it. Also like PCIe, we have had a number of generations of SATA; the current generation is known as “SATA 6 Gb/s”. Now, at a glance, you might think that’s super fast.

But take note of the lowercase ‘b’ in “Gb” there, which means that the name refers to gigabits per second (Gb/s) instead of gigabytes per second (GB/s). One GB is eight Gb, so SATA’s 6 Gb/s converts to 750 MB/s; and the actual bandwidth supported by the port is somewhat lower than that, at 600 MB/s, because SATA’s 8b/10b encoding spends 10 bits on the wire for every 8 bits of data. What’s in a name, eh?
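The bits-versus-bytes arithmetic is easy to sketch. The example below assumes the gap between 750 MB/s and 600 MB/s comes from SATA’s 8b/10b line encoding (10 bits on the wire for every 8 bits of data); the function name is illustrative.

```python
def line_rate_to_mb_s(gigabits_per_sec: float,
                      data_bits: int = 8,
                      wire_bits: int = 8) -> float:
    """Convert a link's line rate in Gb/s to MB/s.

    data_bits / wire_bits models encoding overhead; the defaults mean
    "no overhead", i.e. a plain bits-to-bytes conversion.
    """
    raw_mb_s = gigabits_per_sec * 1000 / 8  # 8 bits per byte
    return raw_mb_s * data_bits / wire_bits


raw = line_rate_to_mb_s(6)                                # naive conversion
usable = line_rate_to_mb_s(6, data_bits=8, wire_bits=10)  # with 8b/10b

print(f"SATA 6 Gb/s, naive conversion: {raw:.0f} MB/s")
print(f"SATA 6 Gb/s, after 8b/10b:     {usable:.0f} MB/s")
```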

SATA HDD

Now in truth, your standard HDD doesn’t really worry about that limit, because it doesn’t even come close. If you take one of the all-time popular HDDs from the Seagate Barracuda lineup, their 1-3TB models have a maximum read rate of 210 MB/s.

SATA SSD

However, if you then switch to a popular SSD, like the Crucial MX500, those models have a maximum read rate of 560 MB/s, so you’re starting to bump up against the limit of the SATA port, which is why manufacturers are heavily promoting M.2 drives going forward. This is also why the general saying is that standard SATA SSDs just add a little bit of noticeable spring to your system, rather than the impressive leap you get going from a SATA HDD to an M.2 SSD.
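To put those read rates into perspective, here is a small sketch estimating how long a large sequential read would take on each type of drive. The 50 GB file size is an arbitrary example, the rates are the maximum figures quoted in this article, and real transfers will be slower than these best-case numbers.

```python
def transfer_seconds(file_size_gb: float, rate_mb_s: float) -> float:
    """Best-case time to read a file sequentially at the given rate."""
    return file_size_gb * 1000 / rate_mb_s  # 1 GB = 1,000 MB


# Maximum read rates quoted in the article, in MB/s.
drives = {
    "SATA HDD (Seagate Barracuda)": 210,
    "SATA SSD (Crucial MX500)": 560,
    "NVMe M.2 SSD (Samsung 970 EVO)": 3500,
}

FILE_SIZE_GB = 50  # hypothetical example file

for name, rate in drives.items():
    seconds = transfer_seconds(FILE_SIZE_GB, rate)
    print(f"{name:<32} {seconds:6.1f} s")
```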

And Finally, External I/O connections (HDMI, DisplayPort, USB, etc.)

This last section is just for the ports you would normally use to plug external devices into your PC, including USB, Thunderbolt, HDMI, and DisplayPort. Just like the examples above, each of these has a maximum bandwidth set by the standard it conforms to, which can become troublesome depending on what you are plugging into them.

HDMI

For anyone with a TV, HDMI has become the standard for sending high-quality video and audio through a single cable. Where a lot of systems fall down is that the monitor or TV they are transmitting to supports only the HDMI 1.4 revision and not the improved 2.0 version. Now the 1.4 version is fine for TV, as it supports 4K resolution at 30fps and 3D.

However, HDMI 1.4 lacks 2.0’s improved color space support as well as its superior bandwidth (2.25 GB/s or 2,250 MB/s), which opens up higher UHD frame rates and resolutions, as well as HDR video. This makes HDMI 2.0 more of a requirement for video production setups.
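A back-of-the-envelope calculation shows why 4K at 30fps fits on HDMI 1.4 while 4K at 60fps needs 2.0. This sketch counts only the visible pixels at 24 bits per pixel and ignores blanking intervals and encoding overhead, so real requirements are higher. The 10.2 Gb/s figure for HDMI 1.4 is an assumption taken from outside this article.

```python
def video_data_rate_gb_s(width: int, height: int, fps: int,
                         bits_per_pixel: int = 24) -> float:
    """Uncompressed pixel data rate in GB/s (active pixels only)."""
    return width * height * fps * bits_per_pixel / 8 / 1e9


HDMI_1_4_GB_S = 10.2 / 8  # assumption: 10.2 Gb/s link rate for HDMI 1.4
HDMI_2_0_GB_S = 2.25      # figure quoted in the article

modes = {
    "4K @ 30fps": (3840, 2160, 30),
    "4K @ 60fps": (3840, 2160, 60),
}

for name, (width, height, fps) in modes.items():
    rate = video_data_rate_gb_s(width, height, fps)
    print(f"{name}: {rate:.2f} GB/s | "
          f"fits HDMI 1.4: {rate <= HDMI_1_4_GB_S} | "
          f"fits HDMI 2.0: {rate <= HDMI_2_0_GB_S}")
```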

DisplayPort

By comparison, DisplayPort has always been a PC-focused connection. Just from a bandwidth perspective, the current version peaks at a data transfer rate of 4 GB/s (4,000 MB/s) and, like its HDMI counterpart, has a high level of color format and depth support, as well as high audio sample rate support.

Now, it is worth noting that you can only get the stated performance with a cable rated for it, so you have to watch out for that if you’re using newer hardware with older cables.

As for HDMI vs. DisplayPort: for the purists who always need the highest color quality, resolution, and frame rates, the pro market has leaned more toward DisplayPort. However, general consumer devices stick with HDMI because of its high adoption rate (it’s compatible with almost all modern technology).

USB

USB is a fun one—only because you can get different sizes of the port as well as different capabilities. Port size aside, however, the current “top” revision for USB is 3.2, which gives a maximum data transfer rate of 2.5 GB/s (2,500 MB/s). This is great for users with fast internal drives, as—so long as you have external drives compatible with USB 3.2—you can essentially transfer huge files over from a portable drive to internal very quickly and be off and running straight away. It’s great for people like me who regularly bring back big video files from university to use on their insanely overpowered PCs!

What about Thunderbolt?

See, now this is Apple being brave (or whatever that recent quote was about the headphone jack; I’m not bitter, honest). In truth, Thunderbolt was developed by Intel in collaboration with Apple, and the current version uses a USB-C connector (versions 1 and 2 used a Mini DisplayPort connector). This brings a lot of considerations from other connections back around as, in a nutshell, Thunderbolt combines PCIe, DisplayPort, and increased power delivery all into one handy connection. Because of what it handles through one controller, its bandwidth is a mighty 5 GB/s (5,000 MB/s). This means that in theory you can have one external device running at twice the speed of a USB 3.2 drive, or you can do Apple’s favourite recent pastime: get a load of dongles and connect up multiple devices and monitors (potentially up to 6 per port) at once in a daisy chain.
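External ports are usually advertised in gigabits per second, so it helps to see how those headline numbers map onto the GB/s figures used in this section. The line rates below are assumptions taken from the respective standards rather than from this article: 18 Gb/s for HDMI 2.0, 32.4 Gb/s for DisplayPort 1.4 (taken here to be the “current version”), 20 Gb/s for USB 3.2 Gen 2x2, and 40 Gb/s for Thunderbolt 3.

```python
# Assumed headline line rates in Gb/s (from the standards, not the article).
PORT_LINE_RATES_GBIT = {
    "HDMI 2.0": 18,
    "DisplayPort 1.4": 32.4,
    "USB 3.2 (Gen 2x2)": 20,
    "Thunderbolt 3": 40,
}


def gbit_to_mb_s(gigabits_per_sec: float) -> float:
    """Plain bits-to-bytes conversion, ignoring encoding overhead."""
    return gigabits_per_sec * 1000 / 8


# Print the ports from slowest to fastest.
for port, gbit in sorted(PORT_LINE_RATES_GBIT.items(), key=lambda item: item[1]):
    print(f"{port:<18} {gbit:>5} Gb/s  ->  {gbit_to_mb_s(gbit):>7,.0f} MB/s")
```

These are raw line rates, so (as with SATA) the usable data rate on each port is lower once encoding overhead is accounted for; DisplayPort’s 4 GB/s above is this raw figure rounded down.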

So There You Have It!

That was my (not so) brief breakdown of current data transfer rates across different common components, devices, and connections!

If you want more information on a port or want to ask us about something not mentioned here, then drop a comment below.

If you want to see our build recommendations for PCs on different budgets, check them out here!